First, configure the TACACS+ server (Cisco ACS in my case) and assign the right shell attribute values to the groups.
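As a rough illustration of what a "shell value" looks like: UCS reads the user's role from a `cisco-av-pair` attribute returned by the TACACS+ server. The line below is a sketch that assumes you want the group mapped to the built-in `admin` role. Where you enter it depends on your ACS version, so check with your ACS admin.

```
cisco-av-pair=shell:roles="admin"
```

Multiple roles can be listed inside the quotes, separated by spaces. The role names must match roles that exist in UCS.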

In Cisco UCS, add a TACACS+ provider on the Admin tab under User Management > TACACS+ > TACACS+ Providers.

Click the green plus button to add a provider. In my case it's the ACS server.

Enter the IP address of the ACS server.

Enter the shared secret key provided by the ACS admin.

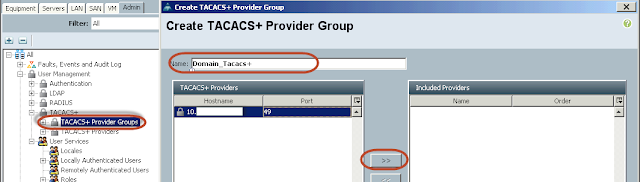
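If you prefer the command line, the same provider can probably be added from the UCS Manager CLI. This is a minimal sketch, not taken from my setup: `192.0.2.10` is a placeholder address for the ACS server, and the exact prompts may differ between UCS Manager releases.

```
UCS-A# scope security
UCS-A /security # scope tacacs
UCS-A /security/tacacs # create server 192.0.2.10
UCS-A /security/tacacs/server* # set key
Enter the key:
Confirm the key:
UCS-A /security/tacacs/server* # commit-buffer
```

Nothing takes effect until `commit-buffer` is run. The asterisk in the prompt marks uncommitted changes.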

Add the provider to the provider group.

On the TACACS+ provider group, click the green plus sign to add a provider to the group.

Give the group a name.

Highlight the provider we added in the left pane and click the >> button to add it to the group.
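The CLI equivalent of the provider group steps looks roughly like this. The group name `tacacsgroup` and the address `192.0.2.10` are placeholders I made up for the example, so substitute your own.

```
UCS-A# scope security
UCS-A /security # scope tacacs
UCS-A /security/tacacs # create auth-server-group tacacsgroup
UCS-A /security/tacacs/auth-server-group* # create server-ref 192.0.2.10
UCS-A /security/tacacs/auth-server-group/server-ref* # set order 1
UCS-A /security/tacacs/auth-server-group/server-ref* # commit-buffer
```

`set order` decides which provider is tried first when the group holds more than one server.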

Create an Authentication domain:

Under Authentication Domains, add a new authentication domain, choose tacacs as the realm, and select the provider group you just created from the drop-down list.
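For reference, an authentication domain can also be created from the CLI along these lines. This is a sketch under assumptions: `tacacsdomain` and `tacacsgroup` are hypothetical names standing in for your domain and provider group.

```
UCS-A# scope security
UCS-A /security # create auth-domain tacacsdomain
UCS-A /security/auth-domain* # create default-auth
UCS-A /security/auth-domain/default-auth* # set realm tacacs
UCS-A /security/auth-domain/default-auth* # set auth-server-group tacacsgroup
UCS-A /security/auth-domain/default-auth* # commit-buffer
```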

Go to Native Authentication and change the default authentication to TACACS.

Here is the critical part: leave Console Authentication set to Local. If your TACACS configuration doesn't work and you are kicked out of the GUI, you can still log in via the console and reset things.
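The two Native Authentication settings above map to the `default-auth` and `console-auth` scopes in the CLI. A sketch, again assuming a provider group named `tacacsgroup` (a placeholder):

```
UCS-A# scope security
UCS-A /security # scope default-auth
UCS-A /security/default-auth # set realm tacacs
UCS-A /security/default-auth* # set auth-server-group tacacsgroup
UCS-A /security/default-auth* # commit-buffer
UCS-A /security/default-auth # exit
UCS-A /security # scope console-auth
UCS-A /security/console-auth # set realm local
UCS-A /security/console-auth* # commit-buffer
```

Keeping `console-auth` on `local` is what gives you a way back in if TACACS fails.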

You will automatically be logged out of the GUI after some time, and you then need to log in with your login ID, which authenticates against the TACACS server.

On the login screen, open the drop-down list in the Domain field and choose the TACACS domain you created earlier.

If you cannot log in and get an error, check the TACACS+ server settings first. Also note that login is refused if you chose no-login as the Role Policy for Remote Users on the Native Authentication page and the server does not return a role for your user.

If you choose Assign Default Role there, you are given login access, but read-only. UCS assigns the read-only role to remote users for whom no role is supplied.

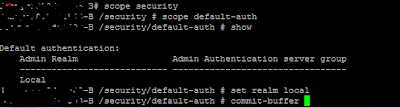
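The role policy for remote users can also be switched from the CLI. A minimal sketch, assuming you want remote users without a role to get read-only access instead of being refused:

```
UCS-A# scope security
UCS-A /security # set remote-user default-role assign-default-role
UCS-A /security* # commit-buffer
```

Use `no-login` in place of `assign-default-role` to go back to refusing users that come without a role.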

If you have trouble and want to revert the configuration:

SSH as admin to the primary/cluster IP address and set default-auth to local.

Then log back in as a local (native) user.
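Once you are in over SSH, the revert looks roughly like this. It is a sketch of the usual command sequence, so check it against the prompts on your UCS Manager release.

```
UCS-A# scope security
UCS-A /security # scope default-auth
UCS-A /security/default-auth # set realm local
UCS-A /security/default-auth* # commit-buffer
```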
